Leonardo

The new pre-exascale Tier-0 EuroHPC supercomputer

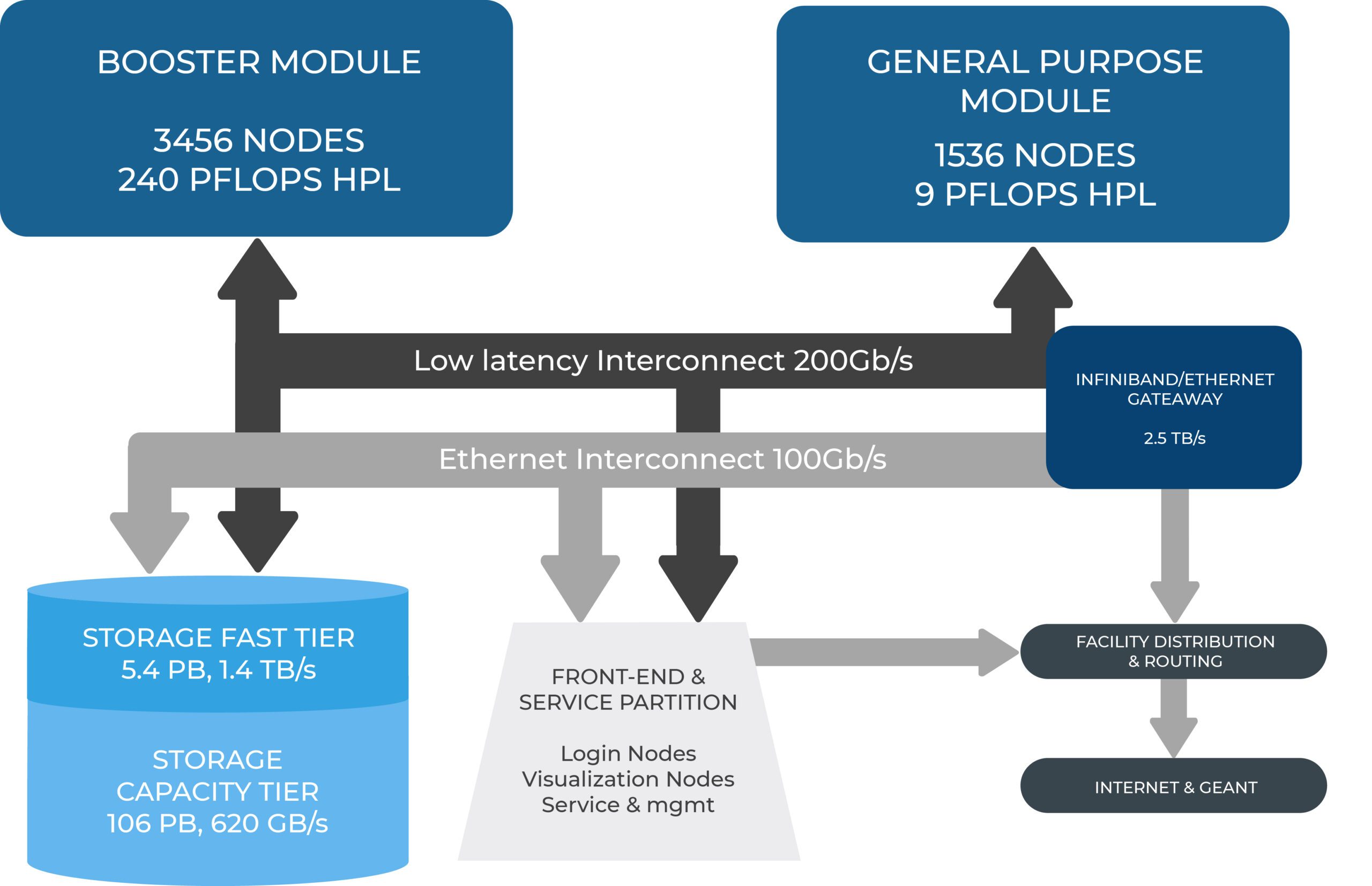

The pre-exascale Tier-0 EuroHPC supercomputer LEONARDO is ranked 7th among the most powerful supercomputers in the Top500 list. It is hosted by Cineca and located at the Bologna Technopole. The system is supplied by ATOS and has two main partitions: the Booster Module and the Data-centric Module. The Booster Module is based on BullSequana XH2135 supercomputer nodes, each with four NVIDIA Tensor Core GPUs and a single Intel CPU. The Data-centric Module is based on BullSequana X2140 three-node CPU blades; each node is equipped with two Intel Sapphire Rapids CPUs with 56 cores each.

The whole system is interconnected with NVIDIA Mellanox HDR 200 Gb/s InfiniBand, whose in-network computing acceleration engines provide very low latency and high data throughput, supporting the performance and scalability of AI and HPC applications.

Compute Nodes:

- 4992 computing nodes, organized in two partitions:

  - Booster partition

    Model: BullSequana X2135 “Da Vinci” single-node GPU blade

    Nodes: 3456

    Processors: single-socket 32-core Intel Xeon Platinum 8358 CPU, 2.60 GHz (Ice Lake)

    Cores: 110592 (32 cores/node)

    RAM: 512 GB (8 × 64 GB DDR4, 3200 MHz)

    Accelerators: 4 × NVIDIA custom Ampere A100 GPU, 64 GB HBM2e, NVLink 3.0 (200 GB/s)

    Network: 2 × dual-port HDR100 per node (400 Gbps/node)

  - Data Centric General Purpose partition

    Model: BullSequana X2140 three-node CPU blade

    Nodes: 1536

    Processors: 2 × Intel Sapphire Rapids, 56 cores each, 2.0 GHz

    Cores: 172032 (112 cores/node)

    RAM: 512 GB (16 × 32 GB DDR5, 4800 MHz)

    Network: NVIDIA HDR cards, 1 × 100 Gbps/node


- 16 visualization nodes: 2 × Ice Lake ICP06, 32 cores, 2.4 GHz; 3 × NVIDIA Tesla V100; RAM: 16 × 32 GB DDR5 4800 MHz

- Large-capacity storage: 137.6 PB (raw), 620 GB/s

- High-performance storage: 5.7 PB, 1.4 TB/s, based on 31 × DDN Exascaler ES400NVX2
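The figures in the list above are internally consistent: each partition's core total is its node count times the cores per node, and the two partitions add up to the 4992 compute nodes. The short Python sketch below re-derives these totals from the per-node specifications, and also gives a rough sense of scale for the storage tiers by estimating how long one full sequential sweep of each tier would take at its quoted peak bandwidth. The helper names (`Partition`, `BOOSTER`, `DATA_CENTRIC`, `sweep_time_hours`) are hypothetical, the GPU count and aggregate RAM are derived here rather than quoted in the specification, and the sweep times are idealized lower bounds that assume decimal units (1 PB = 10^6 GB), sustained peak throughput, and, for the large-capacity tier, raw rather than usable capacity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Partition:
    """Per-node specification of a compute partition (values taken from the list above)."""
    name: str
    nodes: int
    cores_per_node: int
    gpus_per_node: int
    ram_gb_per_node: int

    @property
    def cores(self) -> int:
        return self.nodes * self.cores_per_node

    @property
    def gpus(self) -> int:
        return self.nodes * self.gpus_per_node

    @property
    def ram_tb(self) -> float:
        # Aggregate DRAM in decimal TB (1 TB = 1000 GB), an assumption of this sketch.
        return self.nodes * self.ram_gb_per_node / 1000


# Hypothetical names; the numbers come from the specification.
BOOSTER = Partition("Booster", nodes=3456, cores_per_node=32, gpus_per_node=4, ram_gb_per_node=512)
DATA_CENTRIC = Partition("Data Centric", nodes=1536, cores_per_node=112, gpus_per_node=0, ram_gb_per_node=512)


def sweep_time_hours(capacity_pb: float, bandwidth_gb_s: float) -> float:
    """Idealized time to read or write a whole storage tier once at peak bandwidth."""
    capacity_gb = capacity_pb * 1_000_000  # 1 PB = 10^6 GB (decimal units assumed)
    return capacity_gb / bandwidth_gb_s / 3600


if __name__ == "__main__":
    for p in (BOOSTER, DATA_CENTRIC):
        print(f"{p.name}: {p.nodes} nodes, {p.cores} cores, {p.gpus} GPUs, {p.ram_tb:.1f} TB RAM")
    print("Total compute nodes:", BOOSTER.nodes + DATA_CENTRIC.nodes)
    print(f"Large-capacity tier sweep: {sweep_time_hours(137.6, 620):.1f} h")
    print(f"High-performance tier sweep: {sweep_time_hours(5.7, 1400):.2f} h")
```

Under these assumptions the Booster partition holds 3456 × 4 = 13824 GPUs, and a full pass over the 137.6 PB tier at 620 GB/s takes roughly 62 hours, against a little over an hour for the 5.7 PB high-performance tier at 1.4 TB/s. That difference in sweep time is the main reason to keep hot working data on the high-performance tier.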

Login and service nodes: 16 login nodes are available, plus 16 service nodes for I/O and cluster management.

All nodes are interconnected through an NVIDIA Mellanox network with a Dragonfly+ topology, providing a maximum bandwidth of 200 Gbit/s between any pair of nodes.
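The network bandwidth is quoted in gigabits per second, while memory and storage sizes are quoted in gigabytes, so a conversion by 8 is needed before comparing them. The minimal Python sketch below converts the 200 Gbit/s pair-wise maximum to 25 GB/s and estimates the idealized time to move a payload between two nodes, for example the full 64 GB HBM2e of one A100 or the 512 GB of DRAM in one node. The function names are hypothetical, and the estimate deliberately ignores latency, protocol overhead, and network contention, so real transfers will take longer.

```python
LINK_GBIT_S = 200  # maximum pair-wise bandwidth quoted for the network, in Gbit/s


def gbit_to_gbyte(gbit_per_s: float) -> float:
    """Convert a link rate from gigabits/s to gigabytes/s (8 bits per byte)."""
    return gbit_per_s / 8


def transfer_seconds(payload_gb: float, link_gbit_s: float = LINK_GBIT_S) -> float:
    """Idealized point-to-point transfer time: payload divided by peak bandwidth.

    Ignores latency, protocol overhead and contention (assumption of this sketch).
    """
    return payload_gb / gbit_to_gbyte(link_gbit_s)


if __name__ == "__main__":
    print(f"Link: {gbit_to_gbyte(LINK_GBIT_S):.0f} GB/s")
    print(f"64 GB (one A100's HBM2e): {transfer_seconds(64):.2f} s")
    print(f"512 GB (one node's DRAM): {transfer_seconds(512):.2f} s")
```

Running it prints 25 GB/s for the link, about 2.56 s for 64 GB and about 20.48 s for 512 GB, which gives a lower bound for checkpoint or data-redistribution steps that move whole-node memory images over the interconnect.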
